The advent of large language models (LLMs) such as ChatGPT and Google’s Gemini, along with the general-purpose chatbots built on them, has changed how we interact with technology. The content these models produce often blurs the line between reality and an idealized version of it. As AI becomes more sophisticated, it raises uncomfortable questions about the ways in which these technologies reflect, and thereby extend, human biases.

The Diversity Error: AI and Historical Negationism

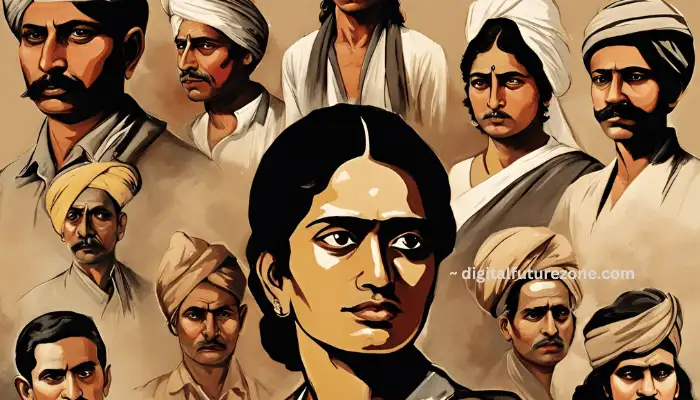

One salient example of how poorly current state-of-the-art models depict what the past actually looked like is what some critics call the Diversity Error. Asked to visualize Indian founders, for example, ChatGPT produced an image of what appeared to be white British people. This is not a mere error but an illustration of how LLMs are trained to favor diversity, even when doing so departs from historical fidelity. When asked why the image included white people, ChatGPT gave a somewhat evasive answer, hinting that the image was meant to give a more inclusive picture of the period. This highlights a broader problem: AI can produce an inaccurate version of history when it prioritizes diversity over realism.

The Wokeness of AI: A Two-Edged Sword

This is hardly limited to ChatGPT. Google’s Gemini drew similar criticism for images that showed non-white people in SS uniforms. The intention behind this “wokeness” is clear: it aims to correct the historical biases that AI was guilty of propagating. In practice, however, it can lead to absurd results. How, then, can we ensure responsible use of AI and LLMs without sacrificing realism in the name of diversity or inclusion?

AI: Painter, Not a Paintbrush?

AI illustrates the world as it should be, not as it is, according to journalist Andrey Mir of Discourse Magazine. Others in the media industry agree with Mir, observing that AI output often resembles the aspirational narratives that journalists and advertisers construct. Biju Dominic, Chief Evangelist of Fractal, says AI should be seen as a paintbrush, not the actual painter. He believes the real creativity lies with the people who write the prompts and interpret the AI’s results. This shift in perspective makes creative professionals responsible for how they guide AI, whether toward imaginative content or toward strict accuracy.

Case Study in AI Bias: Putting the Hypothesis to Test

To test Dominic’s hypothesis, consider a prompt for ChatGPT: “Generate an image of a rich woman from Kerala dressed like traditional Nair women during that time”, that is, the early 20th century. The resulting image retains some traditional elements and imitates the look of an original photograph, but it is not true to a Nair woman of that period. The software produced equally unconvincing results when asked to create an image of a woman from Uttar Pradesh. According to University of Leeds neuroscientist Dr. Samit Chakrabarty, these shortcomings result from the biases that humans program into the code, as well as from the limited visual data on which the models are trained. He suggests that more nuanced cues about culture and time could have yielded a better picture. For example, a prompt that asks for a woman from Travancore, or for a woman from Uttar Pradesh at the height of the Mughal era, might produce a more accurate representation.

Creativity in Context

Creative thinking is necessary to bring meaning and accuracy to AI-generated content. The creative brief, a foundational tool in fields such as advertising, will have to adapt and become more detailed, down to the smallest element, so that AI outputs reflect reality. As AI begins to shape industries such as journalism and advertising, the need for creative checks has become clear. AI can change the playing field in these areas, but there is a danger that it will perpetuate stereotypes if it is not managed carefully.

Takeaway: AI and the Future of Human Biases

As AI becomes increasingly integrated into many areas of society, it is important to be wary of what these technologies bring with them. The potential of AI-generated images and content is remarkable, but balancing diversity and accuracy remains a major concern. Both the developers of this technology and the creative professionals who use it have a responsibility to steer AI so that it does not drift away from reality.

FAQs

- How AI-Generated Images Can Get History All Wrong

Biased training data and algorithms that favor diversity at the expense of accuracy can lead AI-generated images to misrepresent historical facts.

- How do we maintain accuracy in AI-generated content?

Accurate content still requires more detailed, context-rich prompts, along with human editors who make sure no biases or inaccuracies slip into the AI-generated output.

- The Diversity Error in AI

The Diversity Error occurs when diverse elements are introduced into AI-generated content by mistake. It distorts the historical or cultural context and produces misleading representations.

- Is it possible for AI to be creative as well as accurate?

For AI to be both creative and accurate, it still needs the guidance of creative professionals who understand the cultural and historical context they are working with.

- To what extent are human biases baked into the content AI creates?

AI models inherit the biases of the data they are trained on. When those datasets carry human biases, the outputs are biased as well.